One of the healthy things about the Software-Defined Networking (SDN) movement has been that WAN and LAN network engineers are finding common ground.

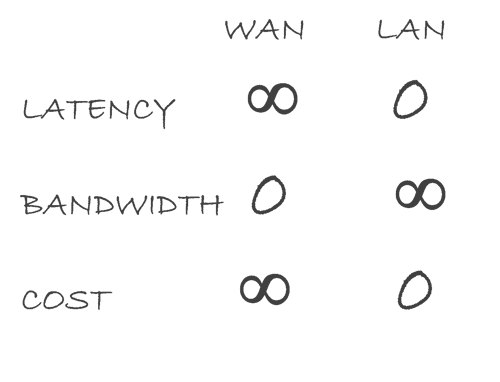

When OpenFlow was introduced, some of its proponents argued that it could replace expensive, proprietary equipment pretty much everywhere in the network, including WAN routers. Certainly, a lot of network IT professionals work in both LAN and WAN environments, but for some, it’s not so easy to understand the differences. A colleague, Lionel Pelamourgues, drew a table on a napkin for me that neatly lays it out.

Looking at the chart below, it’s no surprise that Lionel is a mathematician. His degree is in quantum physics, but he became interested in computers while working on a simulator for his doctoral thesis, which led to a job writing software, followed by architecture and engineering roles for equipment providers and a Tier 1 Telecom Service Provider.

Of course, bandwidth and the other parameters are never zero or infinite, but from the perspective of someone involved with one or the other, they might as well be. Let me explain.

A LAN engineer in a hyperscale data center thinks in terms of latency between two servers in the same equipment rack, which is in the vicinity of 10 microseconds or less (including the processing time of the IP stack in each server). From the perspective of someone in WAN operations, where latency across part of the country (or around the globe) is typically 10 milliseconds and can exceed 100 milliseconds, the latency inside a data center is such a small part of the total that it might as well be zero.
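To put numbers on "might as well be zero," here is a minimal sketch that computes the share of end-to-end latency contributed by the in-rack hop. It uses only the figures from the paragraph above (10 microseconds in the rack, 10 or 100 milliseconds across the WAN); the function name `lan_share_of_total` is purely illustrative, and the model simply adds the two latencies together.

```python
# Figures from the text: ~10 microseconds in-rack, 10 ms or 100 ms across the WAN.
# The function name and the additive latency model are illustrative assumptions.
RACK_LATENCY_S = 10e-6


def lan_share_of_total(wan_latency_s, lan_latency_s=RACK_LATENCY_S):
    """Return the fraction of total latency contributed by the in-rack hop."""
    return lan_latency_s / (wan_latency_s + lan_latency_s)


if __name__ == "__main__":
    for wan_ms in (10, 100):
        share = lan_share_of_total(wan_ms / 1000)
        print(f"WAN {wan_ms} ms: in-rack latency is {share:.4%} of the total")
```

Under these assumptions the in-rack hop accounts for roughly 0.1% of the total at 10 milliseconds of WAN latency and roughly 0.01% at 100 milliseconds, which is why a WAN operator can treat it as negligible.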

Similarly, you can connect two computers in the same room in your home using Gigabit Ethernet with an inexpensive switch and a cable for $25 or less. However, if you want a gigabit connection to the Internet, depending on where you are, it will run from a few hundred to several thousand dollars per month.

All of this actually has a lot to do with deploying visibility and network monitoring solutions.

From a cost standpoint, for most data centers, the scarce resource that needs to be optimized is the server. If applications are waiting on the network because of bottlenecks, then adding network capacity with more NICs or upgrading to higher-speed links is a pretty straightforward cost to justify.

So, while monitoring equipment is used to examine what’s going on in the network, the motivation behind it usually has to do with maximizing the performance of the applications and minimizing bottlenecks in the network so that applications aren’t lagging. If the server interconnect isn’t being fully utilized, it usually isn’t a big issue, because its cost is low relative to the cost of the servers and applications.

Still, many organizations are careful not to overspend on monitoring equipment. If there’s a concern about network bottlenecks, adding bandwidth is inexpensive compared to adding more monitoring taps.

In the WAN, the economics are reversed. The cost of the links in some places exceeds $1 million per kilometer. The economics drive network operators to focus on high utilization of the WAN links, and compared to the cost of the link, additional network monitoring equipment that can help improve link utilization is usually money well spent.

By: Harry Quackenboss
